Recently, I attended a Congress of Human Resources. On stage, the speaker appeared charismatic and professional, and he gave quite an entertaining presentation. He held the audience's attention by constantly making jokes and adding interesting personal anecdotes.

I sat through the entire speech waiting for the speaker to reach a climax, make a solid point or establish a noteworthy conclusion. In the end, I was left unsatisfied, with the feeling that while the attendees had all had a good time, no real learning had actually taken place.

This same situation occurs during trainings at countless companies. In-person trainings often offer a fun and enjoyable classroom environment but lack applicable content. Similarly, online trainings are all too often attractive eLearning courses with impressive graphics and animations that still fail to teach meaningful information.

We must remember that the ultimate goal of a training course is for participants to learn. But how do we know that we have fulfilled this goal after delivering it? Here, it is important to mention one key element: evaluation.

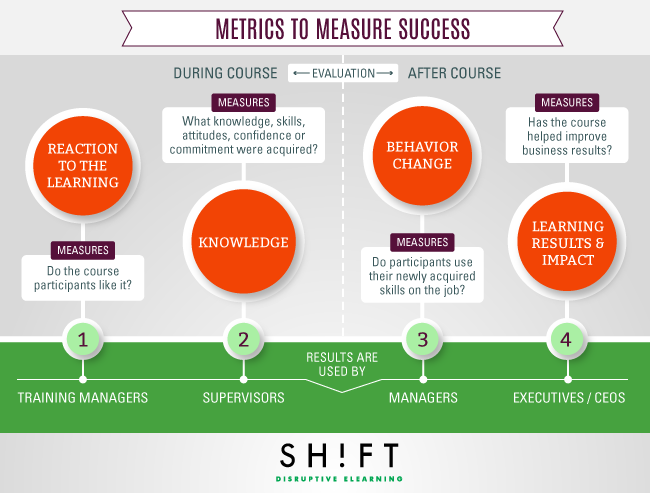

There are many different approaches to evaluating the effectiveness of an eLearning course, but they all share a common first step: identifying success metrics. Kirkpatrick's taxonomy is one seasoned model that continues to receive widespread use. Developed by Dr. Don Kirkpatrick in the 1950s, the model originally contained four levels of training evaluation. Now, the levels have been clarified by Don, Jim, and Wendy Kirkpatrick to form what is called "The New World Kirkpatrick Model". Since the concept has continued to evolve alongside training, it remains a relevant and robust evaluation framework.

This evaluation model is applicable to both classroom training and eLearning.

So to make sure you know how successful your eLearning program really is, check these metrics:

1. Metric #1: Reaction to learning

What it measures: Level of satisfaction, interest and engagement of students.

This level assesses what learners think and feel about the training or eLearning course. But beyond simple satisfaction, the New World Model also measures Engagement (the degree to which participants are actively involved in and contribute to the learning experience) and Relevance (the degree to which participants will have the opportunity to use or apply what they learned in training on the job).

The evaluation of this level measures how participants react to a given training activity. Appropriate questions include: "What was your level of satisfaction after participating in this course?", "Did you feel engaged?", and "Was it relevant?"

How it is done: This evaluation is carried out through reaction surveys, feedback forms or questionnaires, which generally determine the categories to measure and use scales of satisfaction, such as: excellent, very good, good, fair, poor.
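As a rough illustration of how such scale-based answers might be summarized, here is a minimal Python sketch. The numeric mapping of the scale (5 for "excellent" down to 1 for "poor"), the "good or better" threshold, the function name `summarize_reactions` and the sample answers are all assumptions made for this example; they are not part of the Kirkpatrick model.

```python
from collections import Counter
from statistics import mean

# Assumed numeric mapping of the five-point satisfaction scale (5 = best).
SCALE = {"excellent": 5, "very good": 4, "good": 3, "fair": 2, "poor": 1}


def summarize_reactions(responses):
    """Summarize the ratings collected for one survey category.

    `responses` is a list of scale labels such as ["good", "excellent"].
    Returns the mean score, the share of ratings that are "good" or
    better, and the number of answers per label.
    """
    if not responses:
        raise ValueError("no responses to summarize")
    labels = [r.strip().lower() for r in responses]
    scores = [SCALE[label] for label in labels]
    favorable = sum(1 for s in scores if s >= 3) / len(scores)
    return {
        "mean_score": round(mean(scores), 2),
        "favorable_share": round(favorable, 2),
        "counts": dict(Counter(labels)),
    }


# Hypothetical answers to a question such as "Was it relevant?"
relevance = ["excellent", "good", "fair", "very good", "good"]
summary = summarize_reactions(relevance)
print(summary)
```

Reporting the share of favorable answers next to the mean helps when a few very low ratings would otherwise be hidden by an acceptable average.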

However, these evaluation tools tend to be poorly designed and unnecessarily lengthy. It's also easy to ask the wrong questions, leading to data that might not be useful. You can read this article to become acquainted with what to ask and what not to ask.

What information it conveys: This evaluation provides important information about the quality of the course, allowing for immediate adjustments. This could include information about the instructor’s performance, the quality of the materials and the content, etc.

Who the results are for: Training managers.

Evaluation goal: The goal at this stage is merely an initial endorsement by participants of the training. This evaluation is meant to identify any significant problems, not to determine the ultimate effectiveness of the training.

2. Metric #2: Knowledge

What it measures: What knowledge, skills, attitudes, confidence or commitment were acquired by participants?

According to the model's authors, a change in behavior cannot occur without learning. Therefore, evaluating the degree of learning achieved by the participants as a result of their participation in a training process is indispensable.

How it is done: This evaluation is performed before beginning and after finishing the course, in order to compare the increase in knowledge and assess what changes have taken place. Its purpose is to determine if the participants have actually acquired the knowledge and skills that they were meant to during the training.

It generally consists of 1) a written test to measure knowledge and attitudes and 2) a performance test to measure skills. However, evaluators should consider using scenarios, case studies, sample project evaluations, etc., rather than just test questions.
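The before-and-after comparison can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the participant names, the scores, the maximum score of 100 and the function name `learning_gain` are hypothetical. The normalized gain shown here (the share of the possible improvement that was actually achieved) is a common convention borrowed from educational measurement, not something the Kirkpatrick model prescribes.

```python
from statistics import mean

MAX_SCORE = 100  # assumed maximum score of the test


def learning_gain(pre, post, max_score=MAX_SCORE):
    """Compare pre- and post-course scores for the same participants.

    `pre` and `post` map a participant id to a score. Only participants
    who took both tests are included in the comparison.
    """
    both = sorted(set(pre) & set(post))
    if not both:
        raise ValueError("no participant took both tests")
    raw = [post[p] - pre[p] for p in both]
    # Normalized gain: achieved improvement divided by possible improvement.
    # Participants who already had the maximum score are skipped.
    norm = [
        (post[p] - pre[p]) / (max_score - pre[p])
        for p in both
        if pre[p] < max_score
    ]
    return {
        "participants": len(both),
        "mean_raw_gain": mean(raw),
        "mean_normalized_gain": mean(norm) if norm else None,
    }


# Hypothetical scores; "dee" has no pre-test and is therefore excluded.
pre_scores = {"ana": 40, "ben": 60, "cho": 50}
post_scores = {"ana": 70, "ben": 80, "cho": 75, "dee": 90}
result = learning_gain(pre_scores, post_scores)
print(result)
```

Matching participants by id, rather than comparing group averages, keeps the result from being distorted by people who took only one of the two tests.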

What information it conveys: From this evaluation we can detect where the training was successful and where it has failed, in order to plan other techniques or methods to improve the quality and quantity of learning in future programs. In addition, by knowing the extent to which learning has occurred, we can expect certain results in the third level of assessment - namely, a change in behavior.

Who the results are for: Supervisors, line managers.

Evaluation goal: Confirmation that learning has occurred as a result of the training.

3. Metric #3: Behavior change

What it measures: Do participants use their newly acquired skills on the job?

This level measures how much learners are applying new knowledge back at their jobs. It measures the change in behavior, or how the training affects performance. It’s all about TRANSFER.

The New World Kirkpatrick model suggests that to achieve transfer of knowledge, learners need incentives. Incentives, or drivers, require processes which reward and reinforce the performance of certain critical behaviors. These systems not only support learners who apply what they've learned to their jobs, but they also encourage a sense of responsibility among those who successfully perform the behaviors. Ultimately, tangible incentives are the force driving work. Learners who receive real rewards for learning and applying new knowledge to better their performance are more likely to put in the amount of effort required to change their behavior.

How it is done: This step is done through observation, performance benchmarks, interviews and/or surveys of participants, their immediate superiors, peers, subordinates and others who may be involved or interested in the process of behavioral change. Preferably, you should wait for some time after the training event to take this measurement; the author recommends two to three months.

What information it conveys: This step allows us to measure if the knowledge, skills and attitudes learned were ultimately transferred to the workplace. And if they weren’t, then it allows us to identify the reasons why and make adjustments. For example, it may be that the knowledge was acquired, but the work climate and conditions are not conducive to putting it into practice - perhaps the boss does not see it as immediately useful, or the worker hasn’t dedicated enough time, etc.

Who the results are for: Managers.

Evaluation goal: To determine that learning has affected behavior or on-the-job performance.

Here is a step-by-step plan for implementing Level 3 in your training initiatives.

4. Metric #4: Results and Impact

What it measures: Did it impact the bottom-line? Has the course helped improve business results? Did the targeted outcomes occur as a result of the training experience and follow-up reinforcement?

Organizations often talk about ROI (Return on Investment), but the New World Kirkpatrick Model considers ROE (Return on Expectations) a much more useful concept. Unlike ROI's narrow focus on money, ROE's broader focus on expectations leads to a long-lasting result. Kirkpatrick asserts that rather than asking limited questions like "Will I get my money back?", it is much better to consider results-oriented questions, like "What should the result look like?" or "What do we want to change in our organization?"

It is also possible to think of Return on Expectations as the deliverables resulting from a successful training initiative which demonstrate to key business stakeholders how well their expectations have been met.

How it is done: The value that training professionals are responsible for delivering is defined by stakeholder expectations. Therefore, this step is completed by interviewing those involved with the intended impacts. One example is asking supervisors and managers for staff turnover figures from before and after the training program was delivered.

It is imperative that the training professional and the stakeholders enter a negotiation process, so that the training professional can make sure that stakeholder expectations are realistic and achievable with the resources at hand. Thus, you must ask stakeholders questions which clarify their expectations regarding all four evaluation levels, beginning with Level 4. Since stakeholder observations are typically fairly general, you must convert them into observable, measurable success outcomes. This can be done by asking stakeholders what success would look like to them. These outcomes are then used as the Level 4 results and become your collective targets for accomplishing a return on expectations.
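Once expectations have been converted into measurable outcomes, checking them comes down to comparing each indicator before and after the training against the agreed target. The Python sketch below illustrates one way to do that; the `Outcome` class, the indicator names, the figures and the targets are all hypothetical and only show the shape such a check could take.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """One measurable Level 4 outcome agreed with stakeholders."""

    name: str
    before: float  # value measured before the training
    after: float   # value measured after the training
    target: float  # value the stakeholders defined as success
    lower_is_better: bool = False

    def change(self) -> float:
        """Difference between the after and before measurements."""
        return self.after - self.before

    def target_met(self) -> bool:
        """True if the after value reaches the agreed target."""
        if self.lower_is_better:
            return self.after <= self.target
        return self.after >= self.target


# Hypothetical indicators and figures, for illustration only.
outcomes = [
    Outcome("annual staff turnover (%)", before=18.0, after=14.0,
            target=15.0, lower_is_better=True),
    Outcome("customer satisfaction (1-5)", before=3.6, after=3.9,
            target=4.0),
]

report = {o.name: o.target_met() for o in outcomes}
for o in outcomes:
    status = "met" if o.target_met() else "not met"
    print(f"{o.name}: {o.before} -> {o.after} (target {o.target}): {status}")
```

A met target shows that the indicator moved as hoped, not that the training alone caused the change; that question still has to be discussed with the stakeholders.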

What information it conveys: This measurement provides tangible evidence or indicators of the training's impact, which can in turn inform the analysis of future training needs.

Who the results are for: Department managers, execs, CEOs.

Evaluation goal: Confirmation that the training has had the desired results within the organization.

Final Note:

It is useful to note that the four levels can be used in reverse during the planning process to eliminate two problems that are common within many different initiatives - focusing on learning objectives rather than strategic goals, and never actually making it to Levels 3 and 4.
